The perils of private politics in open source

The Node community and its leadership are evolving

Node Summit this year was an interesting and encouraging experience. The stage was full of fresh faces. Fresh faces who weren't there just because they were fresh faces: the project and its surrounding community have genuinely been refreshed. A couple of moments in the "hallway track" were instructive, as we saw some historical big names of Node, like Mikeal Rogers, go unrecognised by the busy crowds of active Node users. Node has not only grown up, but it has also moved forward as any successful project should, and it has new faces with fresh passion.

Node.js is a complex open source project to manage. There's a huge amount of activity surrounding it and it's become a critical piece of internet infrastructure, so it needs to be stable and trustworthy. Unfortunately this means that it needs corporate structures around it as it interacts with the world outside. One of the roles of the Node.js Foundation is to serve this purpose for the project.

The inevitability of politics

Politics is an unfortunate fact for open source projects of notable size. Over time, the cruft of corporate and personal politics builds up and creates messy baggage. Like an iceberg, the visible portion of politics represents only a small amount of what's built up over time and what's currently brewing. I had the displeasure of seeing behind the curtain of Node politics as I became more heavily involved, 6 or so years ago. It's not exactly a pretty sight, but I'm certain it's not unique to Node. For the most part, we have a tacit agreement to keep a lot of the messy stuff private. I suppose the theory here is that there's no reason to taint the rosy view of users and contributors who will never be directly impacted by it, and will never even have reason to see it. Speaking about a lot of these things would simply fall into the gossip category, in fact. So we set it aside and move on. But it never really goes away; the pile of cruft just gets bigger.

On many occasions I have watched as idealistic new contributors, technical and non-technical, are raised up to positions where they become exposed to the private politics. It's disheartening to stand by (or worse: be involved in the process) as someone with a rosy view of the Node community is led, nose first, into its smelly armpits. Of course the armpits are only a small part of the body, so having a rosy view isn't illegitimate if you don't intend to get all up into the smelly bits! But leadership requires a good amount of exposure to the smelly parts of Node.

Many people who step into the elite realms of the Technical Steering Committee (TSC) and Community Committee eventually discover that there's a lot behind the curtains. I use the term "elite" sarcastically here, because that's not what these bodies are intended to be. But the very existence of a curtain creates an unfortunate tendency toward separateness.

I'm confident that most, if not all, of the people currently on the TSC and the Community Committee are in open source because they're attracted to open community. That's what's so great about Node and other thriving open source ecosystems. Many of us are in awkward positions where we have a foot in the corporate world and a foot in open source, so we have to learn to operate in different modes. We end up making choices about how we conduct ourselves in the community versus the corporate world, and it's not easy to stay conscious of the different requirements of our various roles.

There's a danger in being drawn up into these kinds of prominent positions in a complex open source project. We get exposed to the armpit, whether we like it or not. Making matters more complex, we are backed by, and therefore have to interact with, a corporation: the Node.js Foundation. The Foundation is run by a board of highly experienced corporate-political operators (and I mean no disrespect by this—it takes a significant amount of skill to navigate to the kinds of positions in large companies that make you an obvious choice to sit on such boards). Furthermore, the Node.js Foundation is essentially a shell that lives inside the Linux Foundation, a very rich source of corporate politics of the open source variety.

So we are faced with competing pressures: our personal passions for open communities, and the complexities of private corporate-style politics that don't fit very well with "openness" and "transparency". I've felt this since the Node.js Foundation began; I sat on the board for two terms at the beginning, participating in those politics. Yet I've also been one of the loudest voices for transparency and "open governance", a model that I championed before it was adopted by io.js, and then by Node.js under the Foundation. But to be honest, my record is mixed, just like that of everyone else who has had to navigate similar positions. None of us leave unscathed.

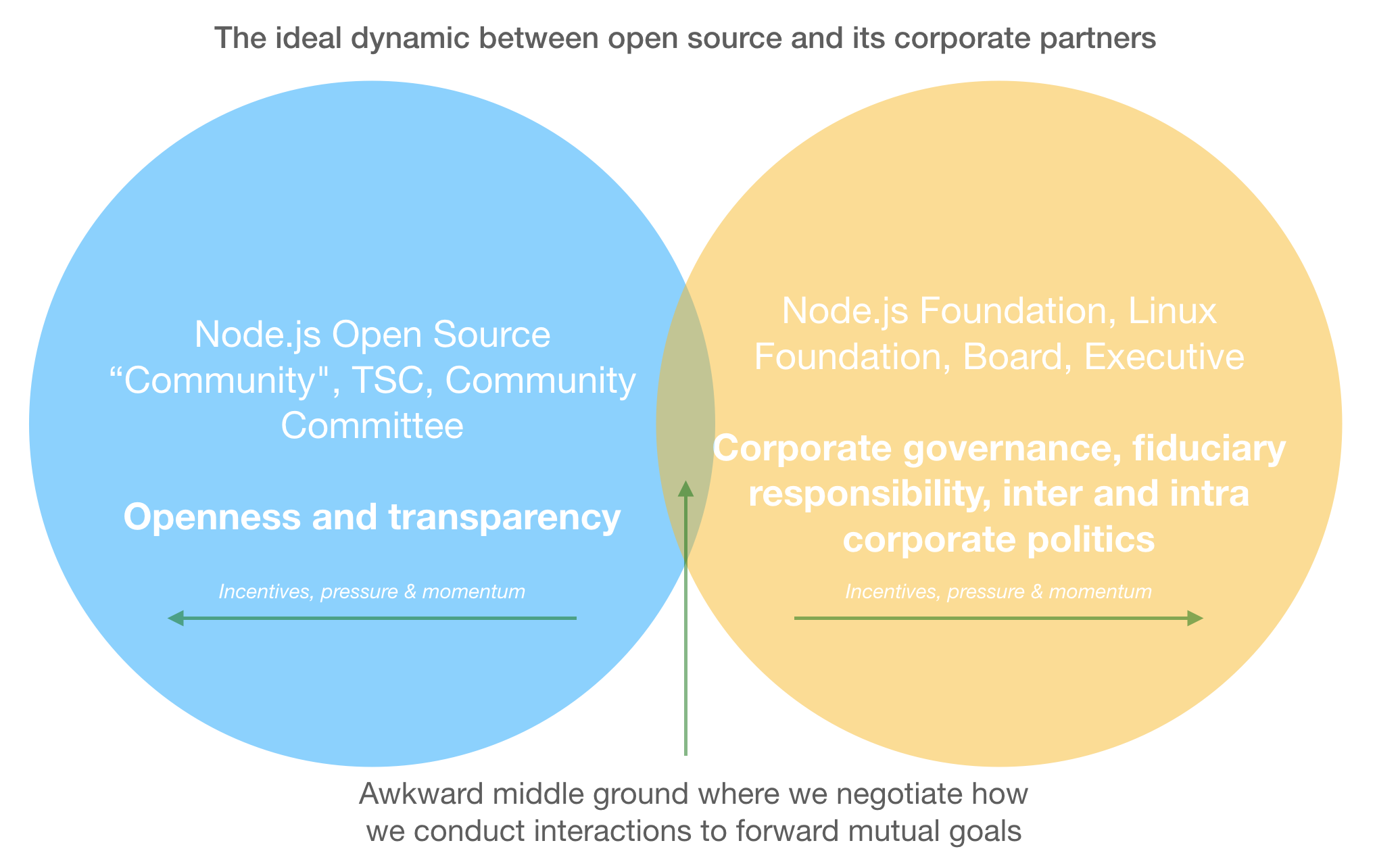

A TSC representative to the board usually wants to be able to report important items to the TSC where they are allowed, and also to use the TSC as a sounding board as they participate in the corporate decision-making process. The dual pressures of "this is sensitive and private" vs "we should be open and transparent" are nasty. Corporate structures and the kinds of politics they engender are not inherently bad; they are arguably necessary in the world in which we exist. In professionalised open source, we are trying to squish together openness and the closed nature of the corporate world, which creates a lot of tension and conflicting incentives. It's not hard to understand why many open source projects actively avoid this kind of "professionalisation".

Serving as a counterbalance to the corporate

Here's the critical part that's so easy to lose sight of: those on the open source side should be the advocates for openness. That's a large reason we get to be at the big table, and it's on us to keep the pressure on, to ensure that a tension continues to exist. Those on the other side are advocates (in some cases legally so) of the corporate approach. The lure of private politics is so strong that it will always have momentum on its side, and it takes very conscious effort to push back against it. From discussions during the formation of the Foundation, I know that many on the corporate side expect open source folks to provide this kind of pressure; that's part of the system's design. Perhaps we should even insert our responsibility as advocates for openness and transparency as an explicit feature of project governance.

The temptation to "keep it private", "take it offline", or "get it right before going public" is natural, because it honestly makes many things easier in the short term, i.e., it's the path of expedience. Our heated sociopolitical environment today makes this worse, as the potential for drama and mob behaviour leads to an understandable risk aversion.

And so we have private mailing lists, private sections of "public" meetings, one-on-one strategy discussions, huddles in the corners of conferences where we occasionally meet in person. We have to keep the wheels turning and it's easier to just push things through privately than have to deal with the friction of public discussion, feedback, criticism, drama.

But that's neglecting what our communities charge us with! Particularly for Node.js, where we have a governance model that makes it clear that the TSC doesn't own or run the project. The TSC is intended to simply be a decision-making fallback.

The project is intended to be owned and run by its contributors. The TSC should facilitate that, and only reluctantly involve itself collectively where individual contributors can't find a way forward. The Community Committee is intended to take an outward-facing role, which makes for a different kind of challenging bargain when it gets sucked in. Today we have various stakeholders asking for "the TSC's opinion" or "the Community Committee's opinion" on matters. I even tried to get that when I was a board member, attempting to "represent the TSC," which I believed was my role at the time. But there really should be no such thing as the TSC's or the Community Committee's "opinion"; it's frankly absurd when you consider that these bodies are supposed to be made up of a broad diversity of viewpoints.

The more we accept private decision-making and politicking, the more we undermine community-focused governance.

A busy time for private politicking

It could be an artefact of my perspective, but it seems that we have a nexus of some very heavy private political discussions happening in Node.js-land at the moment. My fear is that it's the sign of a trend, and I hope this post can help serve as a corrective.

Some highlights, whose context you probably won't see on GitHub, include:

- Discussions about the Node.js Foundation's annual conference, Node.js Interactive, which was renamed this year to JS Interactive, and then renamed again recently to Node+JS Interactive. The Foundation's executive decided to involve the TSC and Community Committee in that last decision, and it was resolved entirely in private (as far as I'm aware) and then announced to the world. I personally thought the switch to "JS Interactive" was a mistake. I also thought that changing the name again was a mistake. To be honest (and in hindsight), however, I'd rather the executive hadn't even drawn the TSC and Community Committee, as collectives, into these private discussions, because we've now become complicit in the private decision-making process. It's really not a good look for either committee to be involved in surprise major announcements; that stands in stark contrast to our open decision-making processes. Seek individual feedback, sure, but this also goes back to my point about the problems with seeking collective opinions.

- Discussions about the efficacy of the Foundation as an executive body, particularly as it focuses on filling an empty Executive Director (i.e. CEO) chair. I'll admit guilt to fuelling a lot of this discussion myself; I've been a strong critic of the Foundation in recent times. However, those of us with something to say need to either be bold enough to go public with critique, take it directly to the decision-makers involved, or butt out entirely. As an advocate of openness and transparency, I'd suggest that public discussion on these matters would be fruitful because so many people and organisations are impacted by them.

- Discussions about very major restructuring of the Node.js Foundation itself, with implications that would call for large changes to the by-laws, including changing the very purpose of the Foundation. I don't want to be the one to speak publicly about this, but I would like to see those who are driving this discussion make their case in public sooner rather than later. Otherwise this will again lead to surprise major announcements that the TSC and Community Committee will again be complicit in. The board should either make such changes and own the responsibility for them, or set up an open process for feedback and discussion. The TSC and Community Committee should be rejecting the requests made of them to be the source of feedback and discussion prior to major changes. These bodies can facilitate broader discussions, but they are not the source of definitive opinion or truth for the Node.js community.

- Discussions about major changes to Node.js project governance, instigated by parties external to the TSC and Community Committee, entirely in private and with significant political and ideological pressure. Large discussion threads and entire meetings have been devoted to these matters already, without one hint to the outside world that a flurry of pull requests may soon appear with little context. Given some of the ideological content, there is potential for more drama, so I have a lot of sympathy for people wanting to take the easy path with this. I'm close enough to the centre of these matters that I will likely write publicly about them soon. I have very strong opinions about what's good for open governance, and about maintaining a diversity of opinion and viewpoints on the critical bodies surrounding Node.js. My primary objection to the process conducted so far is that any discussions about change in open source governance by governing bodies must be conducted in the open, and that any private collective change-planning by these bodies undermines the community-focused open governance model that Node.js has adopted.

Finding a balancing point

I'd like to stop giving private politics any more legitimacy in open source. Leave it for the corporate realm. I'd like for the TSC and Community Committee to adopt an explicit position of being against closed-door discussions wherever possible and of being advocates for openness and transparency. That will cause personal conflicts, and it will mean difficulty for the Node.js Foundation board and the executive when they want to engage in "sensitive" topics. But the definition of "sensitive" on our side should be different. What's more, we are not there to solve their problems; it's precisely the opposite!

Here are my initial suggestions for guidelines:

- If you want to call something "sensitive", then it had better involve something personal about an individual who could be hurt by public discussion of the matter.

- If you have a proposal for changing anything and you're not prepared to receive public feedback and critique, then it's not worth us discussing as groups.

- The TSC and Community Committee should refuse, as much as possible, to be involved as collectives in decision-making processes that must be private for corporate governance or legal reasons. That's not their role, and it's unfair to force them into an awkward corner.

The wriggle room between treating the TSC and Community Committee as "groups" and pulling individuals from those groups into private politics as proxies is a tricky area that every individual is going to have to negotiate. I would hope that having these groups explicitly state their preference for openness, while highlighting the risks, would go a long way toward creating the healthy tension that we need with our corporate partners.

I hope it’s obvious, but this is all my personal opinion and does not necessarily represent that of my employer nor any bodies surrounding Node.js that I’m involved in.